The recent rapid acceleration of Generative Artificial Intelligence (GenAI) has democratized AI for all of us. Heralded as the next Industrial Revolution, it will disrupt and transform how we do business, and what business we do. In the first of a two-part blog, we take a closer look at the benefits and risks that come with embracing new technologies.

AI is the buzzword of the moment. Reports on the rise of new technologies and the ways in which AI will revolutionize virus research, dominate the employment landscape, or be weaponized for deep fakes have filled the headlines in recent months.

The reality is, of course, more complex. AI has been with us in some form or another for decades. It is the recent advent of GenAI in the public sphere that has signalled a paradigm change.

As a result, businesses are grasping the new opportunities that AI offers while struggling to understand and manage the full risk landscape in which it operates.

A misnomer: AI is not ‘intelligent’

To make effective decisions regarding the introduction of AI into your business, it is essential to understand what it is. It is not intelligent, as you and I understand that word.

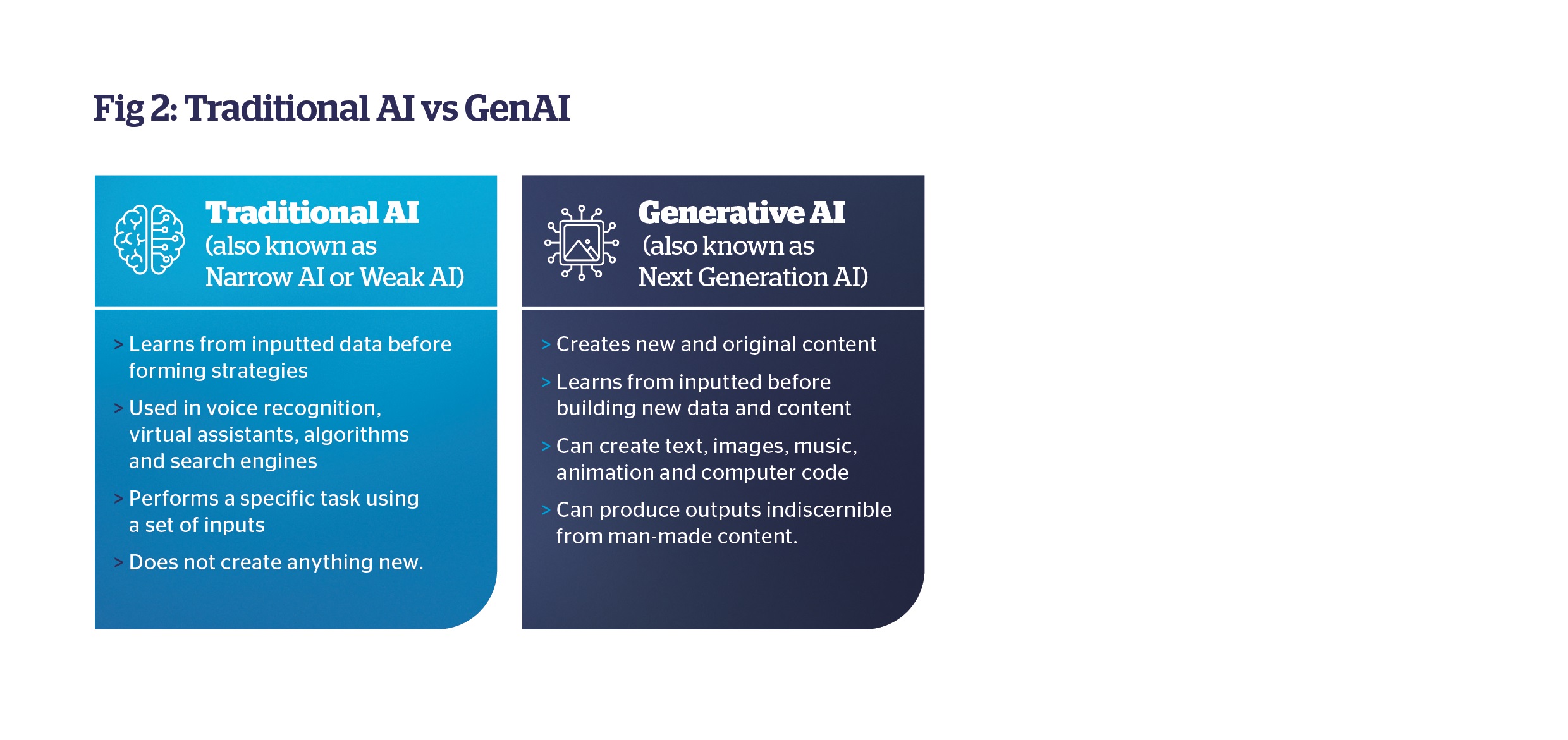

AI is an umbrella term that describes a variety of machine-learning technologies, including Deep Learning, Natural Language Processing, and Computer Vision (the processing and analysis of images rather than words).

In short, AI recognizes patterns, and GenAI creates new patterns.[1]

Take the mobile phone: for years these devices have offered a predictive text function, a basic form of machine learning that uses statistical modelling to anticipate the next letters or word. GenAI (which includes OpenAI’s best-known model, ChatGPT) is an advanced form of this AI.
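To make the predictive text idea concrete, here is a minimal sketch in Python of the simplest kind of statistical model: it counts which word most often follows another and suggests that word. The function names and the sample text are our own illustrative assumptions, not how any particular phone or GenAI product is built, and real systems are far more sophisticated.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text, invented purely for illustration.
sample_text = (
    "please review the policy today "
    "please review the claim today "
    "please review the policy wording"
)

model = train_bigram_model(sample_text)
suggestion = predict_next(model, "the")  # "policy" follows "the" most often here
```

The model has no notion of what a policy or a claim is. It only reproduces the patterns in the text it was given, which is why the quality of that text matters so much.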

GenAI models are trained on extensive sets of data to learn underlying patterns, enabling them to create new data based on those patterns. It is a constantly iterative process, which means that the models are continuously becoming more sophisticated.

Notwithstanding its incredible sophistication and power, it is not ‘intelligent’. What it does well is process huge swathes of data – and apply it using a complex series of algorithms. It is less adept at thinking ‘laterally’ or outside its designed functionality – we must remember that text GenAI does not understand either the input phrase or the text response that it generates.

But AI is not a fad, and it will continue to evolve – verification will be one of many risk factors. As this new technology emerges, businesses must take the time to understand its impact from both risk and benefit perspectives, to avoid the risk of inadvertently exposing their organization or falling behind.

AI Risks & Rewards

Integrating GenAI with business operations

1. Where is AI going to be most useful in my business?

Depending on the sector, GenAI can be used effectively to streamline internal operations or client-servicing areas.

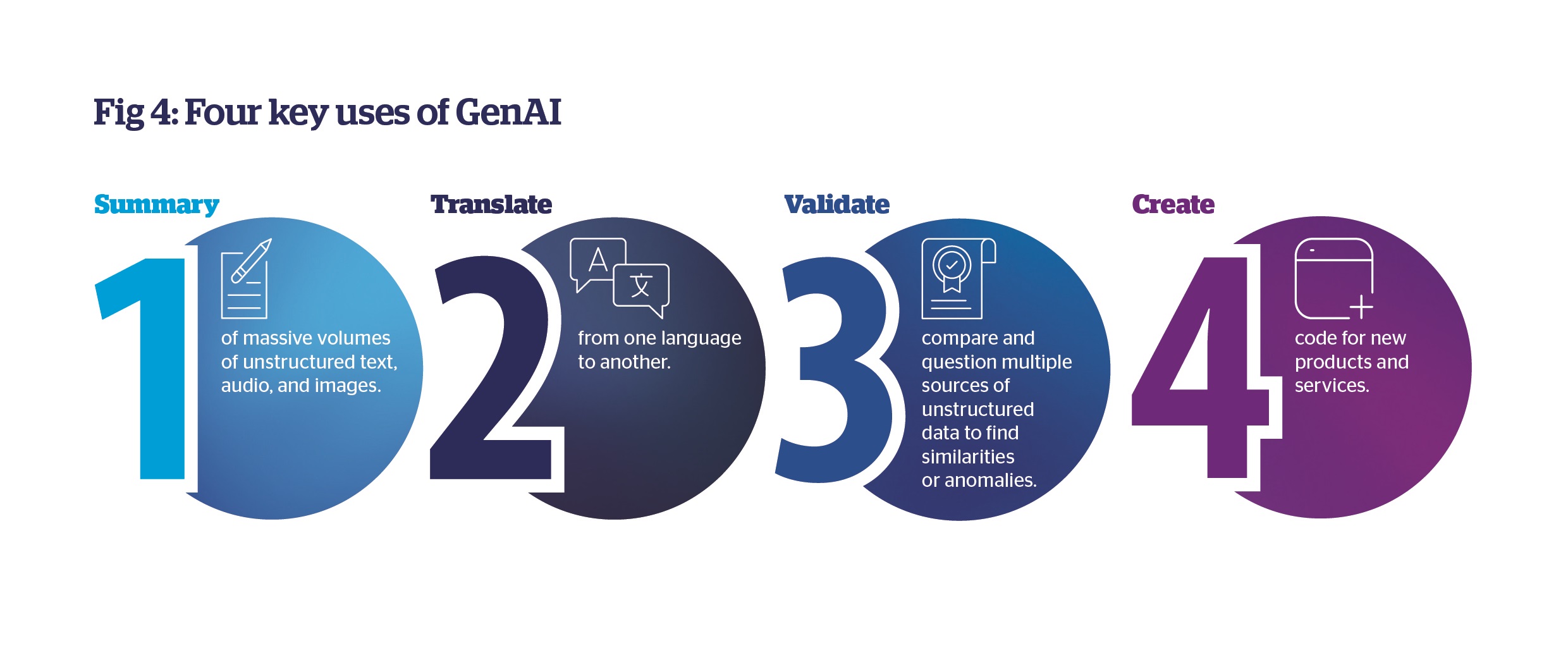

The uses of generative AI can be grouped into four areas:

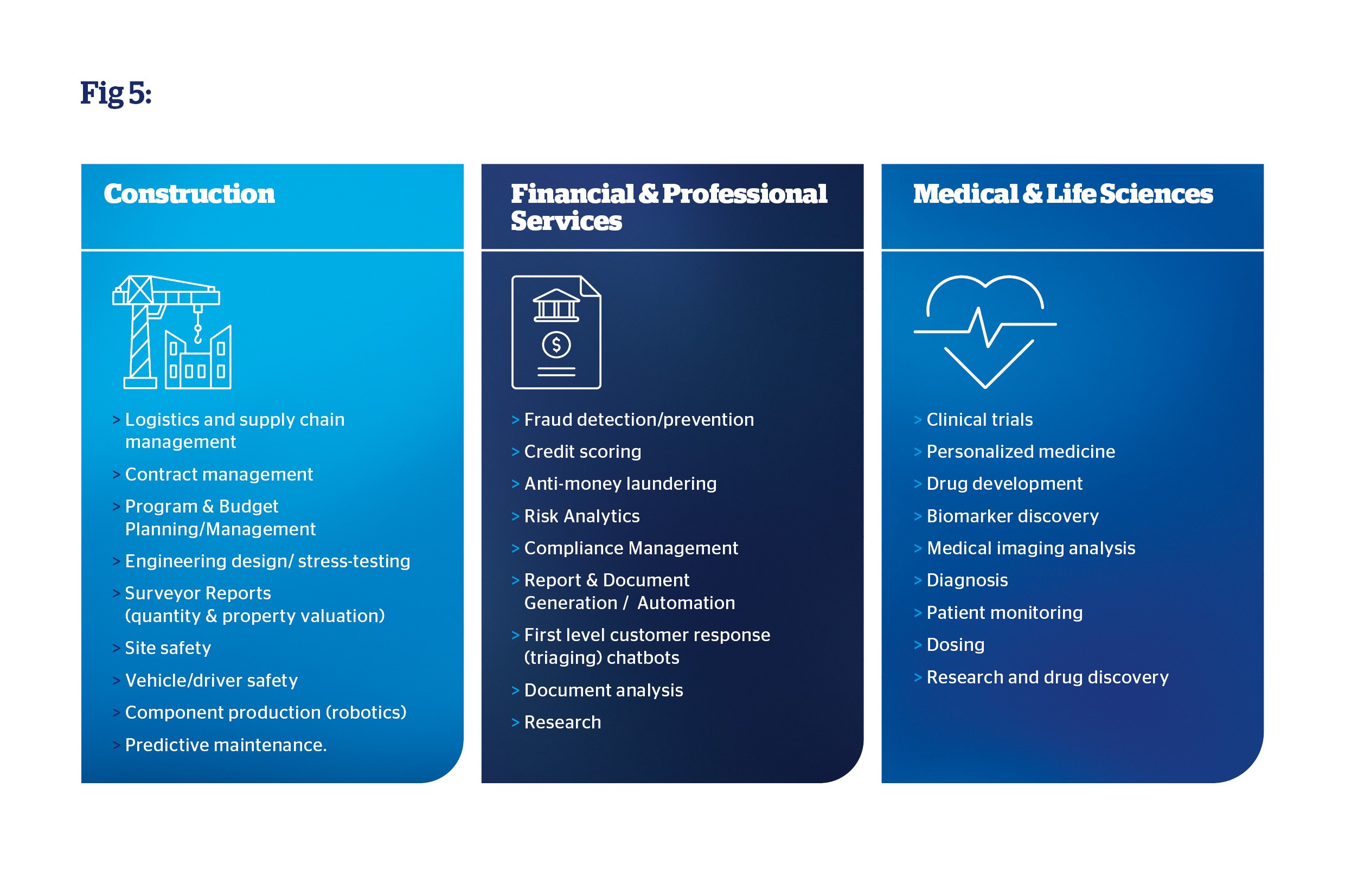

Areas where there is high potential for adoption of AI include:

- Content generation

- Processing common data-related tasks, including data analysis

- Responding to common queries using chatbots

- Predictive modelling

- Quality control

- Fraud detection

Some sector-specific examples of AI uses:

2. How could AI change the way we work?

We are only at the very start of the GenAI journey. The World Economic Forum (WEF) estimates that AI and other economic drivers will result in 83 million job losses between 2023 and 2028 – but it also estimates that 97 million jobs will be created.[2]

Particularly for professional businesses, AI may not necessarily remove the work, but rather change the emphasis of the work, and how a task is structured. Co-pilot tools are designed to support a range of activities, reduce resource burdens, and improve both outcomes and quality via automation.

This support offers intelligent insights to draw from and unlocks more time for risk professionals to apply their core skills as needed. Data tasks that previously took many hours can be undertaken in seconds. This can free senior staff time to focus on more effective application of that data analysis, for example in medical and life sciences research.

In 2022 the UK regulator asked all carriers for their exposure to buildings over five floors with aluminium composite panels (ACP) on the exterior walls. Using AI vision, QBE underwriters were able to triage their entire portfolio to find buildings with five floors or more and exterior cladding. A specialist engineer was then able to review this subset of properties to establish whether the cladding was ACP or another type. With AI augmenting the underwriter and risk engineer, what could have taken weeks was completed within the week.

From a risk perspective, some roles will be more verification orientated – for example, rather than producing a property report, a surveyor may be required to verify the veracity of the AI-generated report. Similarly, a lawyer may have to check the AI legal analysis rather than produce it themselves. We are already seeing AI used in the co-production of legal reports for property transactions, where AI tools are increasingly being used to scrape search databases to build automated reports.

Although GenAI should not replace as many jobs as might be predicted in mainstream media, it will nonetheless change the role-demographics of most organizations – and job specifications will change radically over time.

3. What are insurers concerned about as growth soars for AI?

The insurance industry is not resistant to the benefits and advantages of AI – these emerging technologies will be instrumental in improving risk management, especially in areas including checking source code or legal documents.

However, there are four types of risks that businesses should be aware of as they look to integrate AI into operations:

- Model input risks - incomplete, inaccurate, and biased data used to train the model will result in a poor outcome from the model.

- Model output risks - generated content can be fictitious, unverifiable, and inaccurate.

- Operational risks - uploading commercially sensitive content, applying outcomes without oversight, management of a plethora of models across the organization.

- Existential risks - growth in political interference, corporate and individual cyber-attacks through the creation of fake news, media and images to control, extort and/or influence an outcome.

Accuracy and overreliance

In a competitive and cash-constrained economy, pricing competition could lead to overreliance on AI tools without adequate oversight. In some highly controlled AI applications (where the data search is restricted to limited and defined fields in highly reliable and structured data sources), the output may be safe to use without significant review. In open-ended applications, an intensive review process will be essential.

In many cases it is easier and more effective for a practitioner to undertake the relevant research and then apply it – as it is more likely to be effectively embedded in their minds, thereby making analysis more robust. Checking a document is less likely to engage the brain, and more likely to result in errors. Relying on checking alone also risks de-skilling future workforces, making the role of checking outputs increasingly difficult over time.

Scaling of errors

AI systems rely on a) the programming of instructions; b) the quality of data accessed; c) how recent the data is.

This last is, we suspect, the easiest for AI systems to address. While current systems such as ChatGPT are trained on data up to 2021, it is highly likely that their data will be close to real time before long.

More problematic are the biases and extent of poor data. The internet hosts highly contested and extremist materials alongside accurate sources of information, while inherent biases exist within current data, based on decades of narrowly Anglo-centric output. Problems may originate within data-sources or relate to data interrogation, so businesses must consider how the replication of errors may spread in a very short timescale, leading to potentially hefty claims.

Client data security

Firms that do not invest in sector-tailored AI systems may risk client data input itself being ‘scraped’ by the AI tool. A case in point: The New York Times is currently pursuing OpenAI for unauthorized use of its intellectual property.

Client data input into a generic AI tool is highly likely to be a breach of data protection rights. Having clear and effective policies on how and when AI tools can be used – and which tools are authorized for use – will be a critical element in risk management frameworks.

Fraud and deepfakes

AI risks are not simply about your own use of AI. Malign actors will also harness its capabilities to create highly convincing scams, including the use of voice, face, and video records that can be indistinguishable from the real thing.

While security will evolve in parallel, there is very real concern that data sources could be infiltrated and corrupted, and deepfakes created that facilitate frauds at a level not previously seen. Keeping abreast of these issues and adopting the latest risk controls will be essential for businesses wishing to protect themselves.

Businesses may also consider ‘passive use’ – where third parties may use your data, or in the worst-case scenario, where bad actors use it to facilitate cybercrime.

Regulatory evolution

Governments and regulators are still forming their views on how best to regulate AI. Within the UK, regulation relies on the existing regulatory bodies, whereas within Europe there is a harder regulatory commitment under the EU AI Act 2024. In North America, the position in the United States differs by state, while Canada has taken a harder regulatory position.

This will evolve and organizations would do well to prepare for a hardening of the regulatory landscape in years to come as AI becomes part of our business and society.

AI is not a choice. It will become a business inevitability. A recent survey by Andreessen Horowitz found that enterprise budgets for GenAI have doubled in 2024 and many businesses are finding the funds to capitalize on the opportunity.[3]

AI investment and use should be considered and strategic, within an approved framework aligned with your customer and business needs.

If you are taking an early adopter approach, consider an ‘Agile’ approach:

- Consider applications that may transform internal operations before moving on to direct customer facing deliverables.

- Start small, evaluate the impact – and adjust your approach as needed. Extend if working well.

- Ensure your ‘proof of concept’ measures are set widely and include medium- and long-term measures alongside immediate deliverables.

- Always ensure there is human oversight on any use case.

[1] https://www.forbes.com/sites/bernardmarr/2023/07/24/the-difference-between-generative-ai-and-traditional-ai-an-easy-explanation-for-anyone/

[2] WEF_Jobs_of_Tomorrow_Generative_AI_2023.pdf (weforum.org)

[3] 16 Changes to the Way Enterprises Are Building and Buying Generative AI | Andreessen Horowitz (a16z.com)

*Misinformation and disinformation is a new leader of the top 10 rankings this year. No longer requiring a niche skill set, easy-to-use interfaces to large-scale artificial intelligence (AI) models have already enabled an explosion in falsified information and so-called ‘synthetic’ content, from sophisticated voice cloning to counterfeit websites – WEF_The_Global_Risks_Report_2024.pdf (weforum.org)